Overall impact of Signed Exchanges on page load speed—a data-driven study

Boosting page speed for Google traffic with Signed Exchanges (part 9 of 10)

Context (Oct 2025): After deep work with SXG, I suspected changes might be coming—just not this quickly. Cloudflare announced SXG deprecation, and I observed SXG stop working around Sept 19, 2025.

If you still want SXG, the current path is to self-host—but the tooling looks abandoned, and setup is non-trivial. Also, Google may follow Cloudflare by shutting down the SXG cache and removing SXG support in Chrome.

In the previous part, we examined how different page load types affect performance for Google-referred users. The results were surprising: while some methods achieved sub-second load times, others actually degraded performance compared to standard loading.

But here's what really matters: your website doesn't use just one loading method. In practice, visitors experience a mix of these page load types, with the distribution depending on your technical setup, caching configuration, and which optimizations you've implemented.

In this post, I'll analyze how this mix affects overall Largest Contentful Paint (LCP) performance using real-world data from my site. My primary goal is to answer a critical question: when we account for all the different ways pages actually load, does Signed Exchanges (SXG) improve or harm overall performance?

This post is part of my series on SXG—a technology that can make your website load dramatically faster (and sometimes slower) for users coming from Google. If you’re thinking about implementing it, start here.

TL;DR

For Google-referred users:

Proper SXG implementation can bring the average LCP below 1 second

Boosting SXG cache utilization can cut almost half a second from the average LCP

Poor SXG configuration can make things much worse (+500 ms)

HTML edge caching provides consistent benefits regardless of other optimizations.

Your mileage may vary

The data and conclusions in this post come from my specific website setup, serving primarily Polish users with particular CDN and caching configurations. While the patterns I observed may apply to similar setups, your results will likely vary based on your infrastructure, user geography, and implementation details.

Measuring frequency of page load types

The measurement data I collected allowed me to compare the frequency of page load types in different configurations. I extended the spreadsheet from the previous post to include frequency data and overall estimations. You can download it below.

For more information on the data collection methodology, please refer to my previous posts.

Let me first examine how different configurations performed.

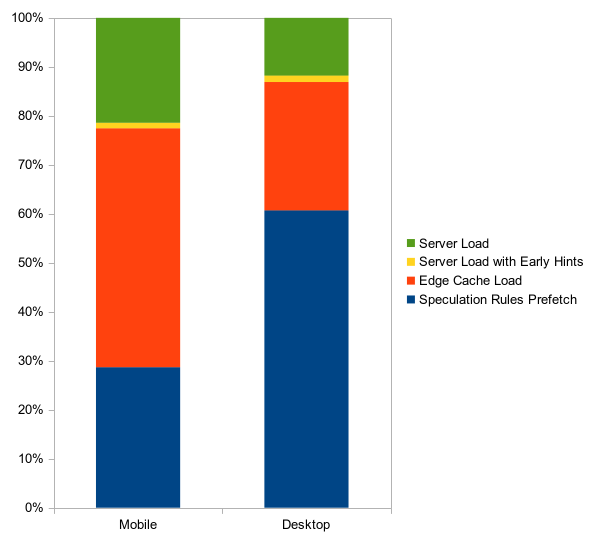

Standard setup with edge cache

For a short period, I disabled SXG for testing purposes. This way, Google-referred users visited my website using only the following methods:

Speculation Rules Prefetch

Edge Cache Load

Server Load with Early Hints

Server Load

The chart below visualizes the proportions of page load types.

My observations:

Pages were prefetched using Speculation Rules twice as often on desktop (61%) as on mobile (29%). The explanation is simple: in addition to prefetching the first 2 results, Google Search on desktop also prefetches results on hover.

Most of the on-demand page loads use Cloudflare edge cache, ensuring fast load speed. This suggests that my website's edge cache hit rate is high.

Early Hints are almost non-existent in the breakdown. This suggests that when you use HTML caching, Early Hints provides minimal performance benefits, as discussed in the previous part. For this reason, as well as for more readable charts, I omit Early Hints in further discussions.

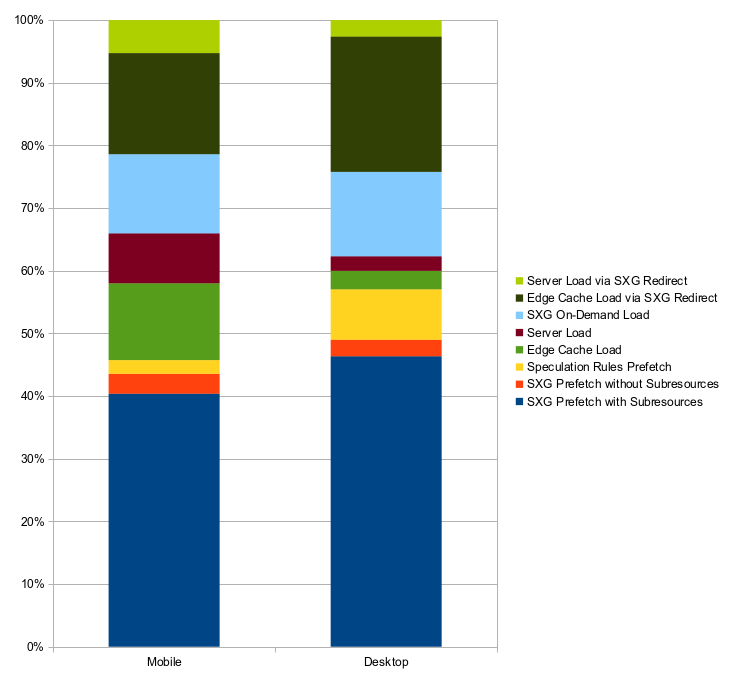

Standard SXG setup with edge cache

Most of the time, my website utilizes SXG, as well as other performance-improving technologies, including edge cache and Speculation Rules prefetching.

I reused the data I measured for performance analysis to calculate frequencies of page load types. Here they are, followed by my comments.

SXG was used for most of the visits

All SXG-related page views accounted for 78-87% of total traffic, depending on the device category. In other words, only 13-22% of Google visits were normal loads or were prefetched using Speculation Rules.

It's worth noting that the share of SXG visits was 9 percentage points higher on desktop than on mobile, possibly due to different user behavior patterns. If you have a better explanation, leave a comment below.

Half of the visits used prefetching

Prefetched pages accounted for 46-57%, making about half of the visits better than normal loads.

Fully prefetched page loads (the best possible experience) covered 40-46% of traffic!

Speculation Rules prefetching was almost eradicated

For SXG-enabled websites, Google prioritizes this technology. In other words, SXG cannibalizes Speculation Rules prefetching.

As a result, Speculation Rules prefetching was used for only 2% of mobile and 8% of desktop traffic.

Performance degradation caused by SXG affected many users

13% of mobile page views used SXG On-Demand Load, which degraded performance compared to normal load. As I explained in the previous part, it didn’t affect the desktop in my setup.

SXG Redirect impacted 21% of mobile visits and 24% of desktop visits.

If we sum the above numbers for mobile (13% + 21% = 34%), we end up with 1 in every 3 users experiencing worse performance!

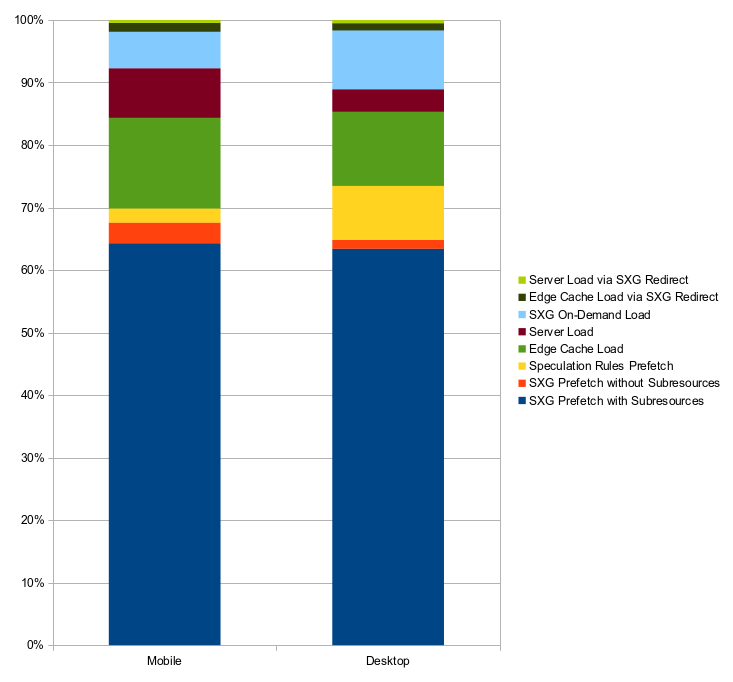

Boosted SXG setup with edge cache

After seeing the results, I began to wonder how to improve the overall performance. Increasing the share of fully prefetched pages and decreasing the share of SXG fallback redirects would probably help.

One way to achieve that is to increase the expiration times of pages. If a given page is kept longer in the SXG cache, then it’s more probable someone will prefetch it.

However, I decided to keep my current 24-hour expiration time. Instead, I built a system that continuously and actively feeds the Google SXG cache with a large set of pages, effectively boosting its utilization.

This drastically improved the mix of page load types as visualized on the chart below:

It almost eliminated SXG fallback redirects, bringing them down to less than 2%.

At the same time, the ratio of prefetched pages increased to 70-73%, while the fully prefetched loads took 63-64%.

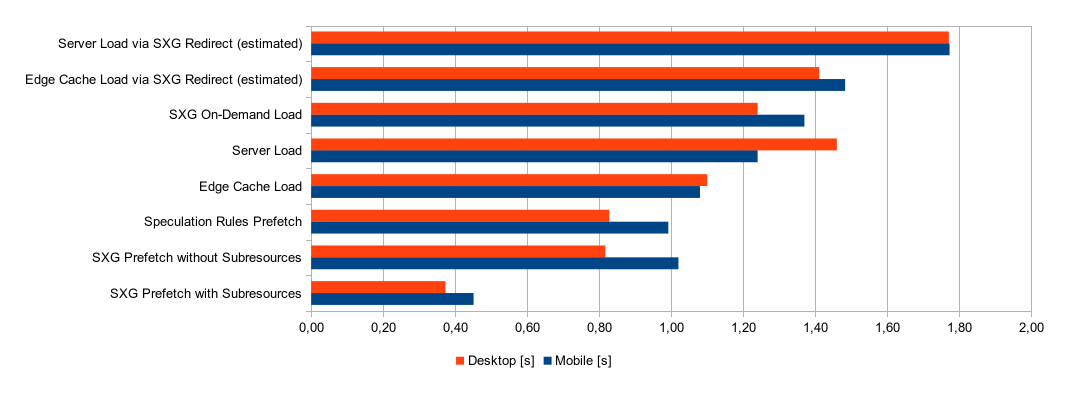

Overall LCP

We now have the frequencies (presented above) and the average LCP for each page load type (shown on the chart below and discussed in detail in the previous post).

These data allow me to calculate the overall average LCP for Google-referred visits for each configuration. It's simply a weighted average: each page load type's average LCP is multiplied by its share of visits, and the products are summed. This gives us the real-world performance impact, accounting for how often each loading method actually occurs.
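As a minimal sketch of that calculation, here is how the weighted average could be computed. The function name and all numbers are placeholders chosen for illustration; they are not my measured values.

```python
def weighted_average_lcp(shares, avg_lcp_ms):
    """Return the overall average LCP as a share-weighted mean.

    shares:     page load type -> fraction of visits (should sum to ~1.0)
    avg_lcp_ms: page load type -> average LCP in milliseconds
    """
    total_share = sum(shares.values())
    if abs(total_share - 1.0) > 0.01:
        raise ValueError(f"shares sum to {total_share:.3f}, expected 1.0")
    weighted_sum = sum(share * avg_lcp_ms[kind] for kind, share in shares.items())
    # Dividing by the total cancels out small rounding errors in the shares.
    return weighted_sum / total_share


# Placeholder inputs for illustration only (NOT the measured data).
example_shares = {
    "Speculation Rules Prefetch": 0.30,
    "Edge Cache Load": 0.60,
    "Server Load": 0.10,
}
example_lcp_ms = {
    "Speculation Rules Prefetch": 500.0,
    "Edge Cache Load": 1000.0,
    "Server Load": 1500.0,
}

overall = weighted_average_lcp(example_shares, example_lcp_ms)
print(f"Overall average LCP: {overall:.0f} ms")
```

Running the same function once per configuration and per device category, with the real shares and averages from the spreadsheet, would give figures that can be compared directly.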

However, before doing so, let’s summarize the configurations:

Standard + edge cache: The only improvement over the default (normal loads and prefetching with Speculation Rules) is edge cache for HTML responses.

Standard + edge cache + SXG: As above, but with properly implemented SXG.

Standard + edge cache + boosted SXG: Same, but with a system boosting SXG cache utilization.

Other configurations

In addition, the measured data for the three configurations above allow us to simulate different configurations. We can do this by replacing some of the page load types with others for a given base configuration, creating a new configuration.

This way, we could simulate how my website would perform in more common setups, such as without edge cache for HTML, by replacing Edge Cache Load with Server Load and calculating the average LCP.

Another simulation could show us what the LCP would look like if we went back in time to the days before prefetching. We could also simulate poor SXG implementations to see how they impact the average LCP.
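The replacement idea can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names are hypothetical, and the mix and LCP numbers are placeholders, not my measured values.

```python
def replace_load_type(shares, old, new):
    """Return a new mix in which every visit of type `old` counts as `new`."""
    simulated = dict(shares)           # copy, so the base mix stays untouched
    moved = simulated.pop(old, 0.0)    # share that changes its load type
    simulated[new] = simulated.get(new, 0.0) + moved
    return simulated


def average_lcp(shares, avg_lcp_ms):
    """Share-weighted average LCP in milliseconds."""
    total = sum(shares.values())
    return sum(s * avg_lcp_ms[kind] for kind, s in shares.items()) / total


# Placeholder base configuration (NOT the measured data).
base_mix = {
    "Speculation Rules Prefetch": 0.30,
    "Edge Cache Load": 0.60,
    "Server Load": 0.10,
}
placeholder_lcp_ms = {
    "Speculation Rules Prefetch": 500.0,
    "Edge Cache Load": 1000.0,
    "Server Load": 1500.0,
}

# Simulate a setup without edge cache for HTML:
# every Edge Cache Load becomes a Server Load.
no_edge_cache = replace_load_type(base_mix, "Edge Cache Load", "Server Load")

print(f"Base:          {average_lcp(base_mix, placeholder_lcp_ms):.0f} ms")
print(f"No edge cache: {average_lcp(no_edge_cache, placeholder_lcp_ms):.0f} ms")
```

Such a simulation assumes that the average LCP of each page load type stays the same when the mix changes, so the results are estimates, not measurements.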

Here are the 4 additional configurations I simulated:

Good ol' days (no prefetching, no edge cache): just plain old Server Load for everything

Standard: the way most websites work by allowing Google to prefetch pages using Speculation Rules and not bothering with edge cache for HTML

Standard + poor SXG (0% SXG cache utilization): as above, but with SXG enabled and at the same time crippled by too short expiration times, causing the Google SXG cache to be empty most of the time

Standard + poor SXG (no subresources): same as Standard, but with partial SXG implementation, working properly only for HTML documents and not for subresources

Performance results of different configurations

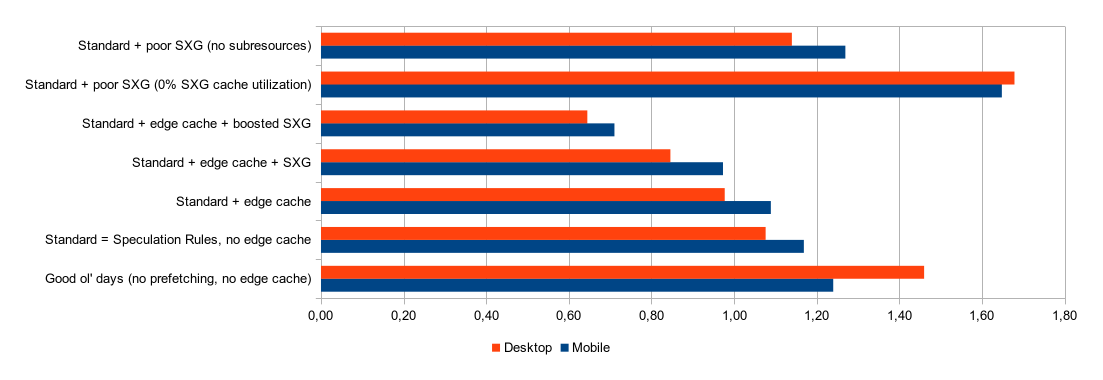

Here are the overall, calculated LCP values for my website.

Keep in mind these are averages, not 75th percentiles. The 75th percentiles would likely be higher, but I won't attempt to estimate them due to the potential for significant error, at least for some of the configurations.
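One reason such an estimate is error-prone can be shown with a small sketch: per-type averages combine exactly into an overall average, but per-type 75th percentiles do not combine into an overall 75th percentile. The samples below are synthetic and chosen only to make the effect visible; they are not my measurements.

```python
from statistics import mean, quantiles


def p75(samples):
    """75th percentile, using the statistics module's default method."""
    return quantiles(samples, n=4)[2]


# Synthetic LCP samples in milliseconds (NOT measured data).
prefetched = [300, 400, 500, 600]
on_demand = [1500, 2000, 2500, 3000]
all_visits = prefetched + on_demand

share_prefetched = len(prefetched) / len(all_visits)
share_on_demand = len(on_demand) / len(all_visits)

# Weighted combination of per-type statistics.
mean_from_types = (share_prefetched * mean(prefetched)
                   + share_on_demand * mean(on_demand))
p75_from_types = (share_prefetched * p75(prefetched)
                  + share_on_demand * p75(on_demand))

print(f"Mean: from types {mean_from_types:.0f} ms, actual {mean(all_visits):.0f} ms")
print(f"P75:  from types {p75_from_types:.0f} ms, actual {p75(all_visits):.0f} ms")
```

In this toy data set, the weighted means match the true mean, while the weighted percentiles land far from the true 75th percentile. Estimating percentiles properly would require the raw per-visit samples for each configuration.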

Proper SXG implementation improves the overall performance

Despite side effects, the overall impact of SXG on average LCP is positive. Compared to the standard configuration (Speculation Rules, no edge cache):

Mobile: -196 ms

Desktop: -231 ms

Boosted SXG has superior performance, but it’s not for everyone

Eliminating SXG side effects and improving cache hit rate paid off. The average LCP dropped significantly when compared to the standard configuration:

Mobile: -458 ms

Desktop: -432 ms

The result: pages display in ~700 ms for an average user coming from Google. That’s worth celebrating!

It’s worth noting that this solution makes sense as long as the number of pages you want to push to Google SXG cache is small enough. I don’t know the limits of this cache, but I imagine that for larger websites, they may eventually be reached.

Forgetting about SXG subresources is a mistake

The simulated configuration with disabled prefetching of subresources performed suboptimally. In comparison with the standard setup, the average LCP increased:

Mobile: +100 ms

Desktop: +64 ms

While not terrible, this raises the question: What's the purpose of implementing SXG if the results are worse, even slightly?

If you decide to use SXG, it’s critical to implement subresource prefetching. Otherwise, instead of improving your website's overall performance, you will degrade it due to SXG side effects. If you use SXG only to prefetch HTML, Speculation Rules prefetching will do this job much better.

But there is a mistake that will cost you a lot more.

Make sure you set proper expiration times for SXG

The simulated scenario with 0% SXG cache utilization, which could be caused in real life by setting too short expiration times for HTML, caused substantial performance degradation:

Mobile: +479 ms

Desktop: +602 ms

This is the result of the latency added by SXG fallback redirects.

If long expiration times are problematic in your use case, I can see only 3 solutions:

Using a long expiration time for a page, combined with fetching fresh critical parts client-side.

Using short expiration times and implementing a system to boost the SXG cache utilization aggressively (may not scale well, as mentioned above).

Forgetting about SXG, unfortunately.

Otherwise, your overall LCP will suffer.

Edge cache is a cherry on top

Edge caching improved performance, whether used alone or in combination with other performance optimization techniques. Compared to the standard configuration, the user experience was slightly better:

Mobile: -80 ms

Desktop: -99 ms

If the website has properly implemented SXG, enabling edge caching is a formality and an almost free ~100 ms LCP improvement.

Speculation Rules prefetching is positive

In the remaining setup, I simulated a scenario without prefetching. The results were worse than the standard configuration:

Mobile: +71 ms

Desktop: +384 ms

In other words, the improvement from prefetching was slight on mobile, but on desktop prefetching reduced LCP significantly because it compensated for the Cloudflare TTFB issue I mentioned in the previous post.

That’s a good result given that it comes free. Thanks, Google!

Summary

I presented the overall impact of SXG on website performance, using my website as an example. I believe some of the observations I share in this case study should apply to other websites as well, but your mileage may vary. If you're considering implementing SXG, I hope I made it slightly easier for you to decide.

In the next and final part of the series, I will share my conclusions and thoughts on the SXG technology.