My conclusions after using Signed Exchanges on my website for 2 years

Instant page loads for Google visitors using Signed Exchanges—part 10 (final)

Update: After finishing this article, I learned that Cloudflare plans to deprecate Signed Exchanges (SXG).

Recently, as I dug deeper into the SXG ecosystem, I suspected this might be coming—just not this quickly. Still, SXG proved technically solid on my site and delivered meaningful performance gains. My conclusions below reflect two years of hands-on use.

If you still want SXG, the current path is to self-host—but the tooling looks abandoned and setup is non-trivial. Also, Google may follow Cloudflare by shutting down the SXG cache and removing SXG support in Chrome.

Signed Exchanges (SXG) is a technology mainly used to improve page load speed for Google-referred users.

I enabled it for my website in October 2023, resulting in substantial improvements to the Largest Contentful Paint (LCP) metric.

I couldn’t find comprehensive, developer-focused documentation, so I set out to write my own SXG implementation guide. I initially planned three posts. I didn’t expect it to turn into 11 deep-dive articles (10 parts plus one extra), 20 interactive demonstrations, 4 related open-source projects, plus detailed performance data and extensive reverse engineering.

In this post, I summarize my research and experiences with SXG. Where appropriate, I compare it with other performance-improving technologies used by Google: Accelerated Mobile Pages (AMP) and Speculation Rules prefetching.

SXG advantages

SXG enables cross-origin prefetching of entire pages with subresources while maintaining users’ privacy.

Websites serve pages in a special format consumed by so-called link aggregators (such as Google). Strictly speaking, the aggregators don't prefetch anything themselves. When a user visits an aggregator's page, it instructs the browser to do the prefetching.

Page load speed

With SXG, an entirely prefetched page displays almost immediately after the user navigates to it, resulting in a great user experience, even on a slow connection (assuming the prefetching is completed before navigation).

The video below shows an extreme example: after going offline, I navigate to the prefetched website without issues.


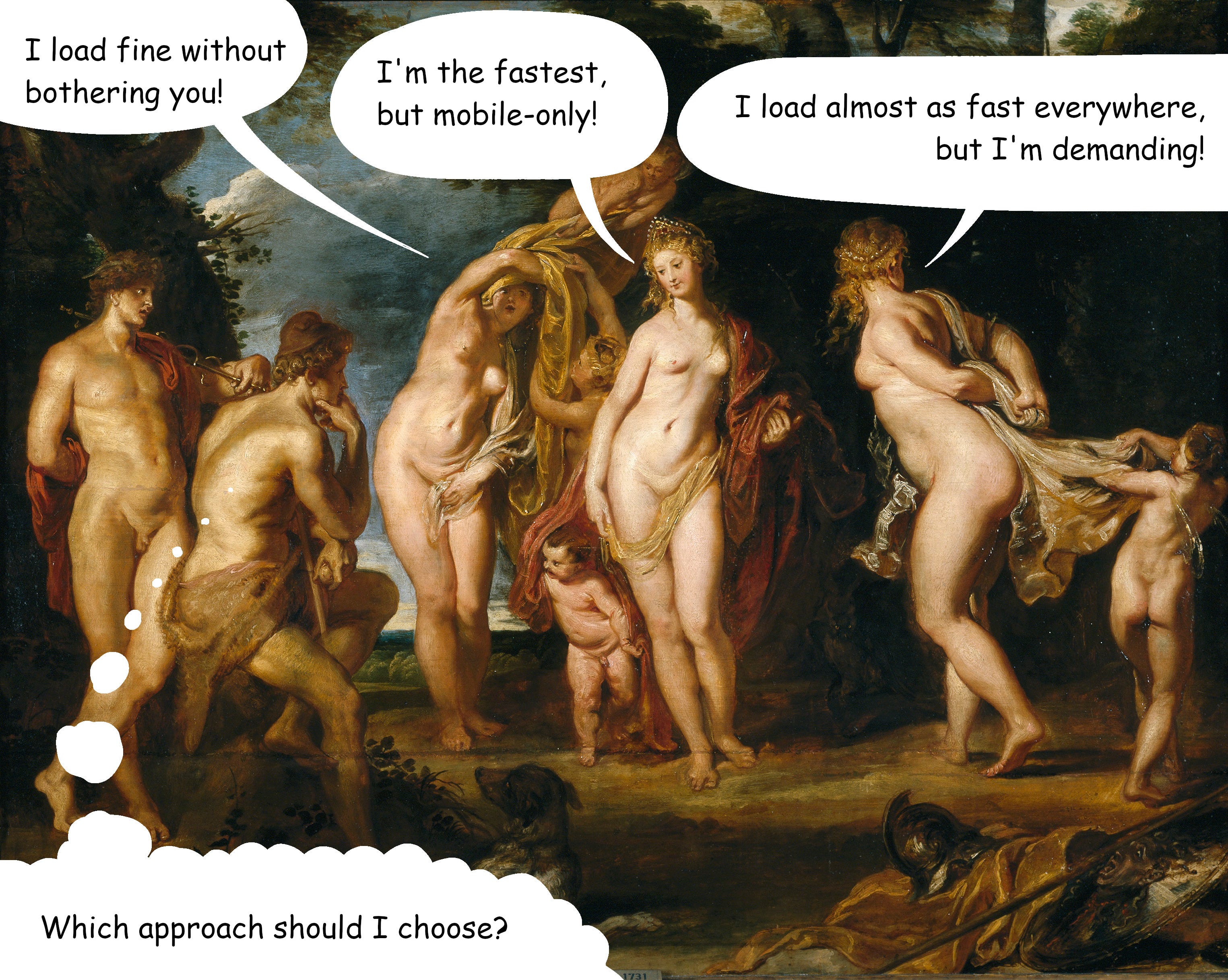

Compared to similar technologies in use today, only AMP offers better performance, at the cost of severe limitations and trust issues. Speculation Rules prefetching falls short mainly because it can't prefetch subresources, and also because it doesn't work for returning visitors in most cases.

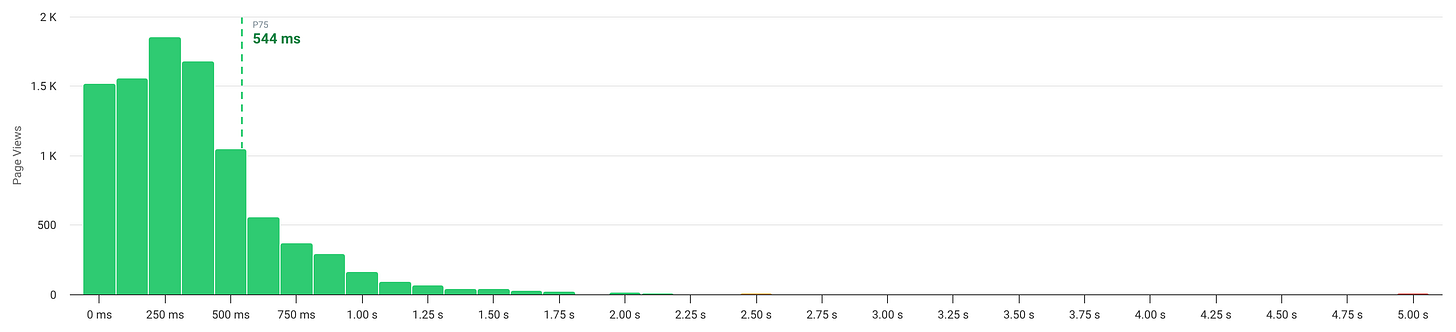

Theoretically, if used to its full potential, SXG could give 75% of my users an LCP under 544 ms.

In practice, I achieved an average LCP of around 700 ms for Google-referred users. Calculating the exact 75th percentile is complex for reasons discussed in previous posts, but I estimate it's under 1 second, which is still a solid result for a high-traffic, image-rich production site!

If you want to know more about this topic, I wrote a detailed post on SXG performance measurements.

User engagement

SXG prefetching showed significant user engagement improvements in my testing, which I attribute to better page load speed.

I won’t present a full report here; instead, I'll highlight the key user engagement metrics I measured for my website, focusing on mobile, which constitutes the majority of overall traffic. Your results may be different.

When compared to loading a page on demand, prefetching a page with subresources using SXG increased views per session and average session duration by 36%. At the same time, it reduced the bounce rate by more than a quarter (27%).

For comparison, Speculation Rules prefetching improved views per session by 15%, average session duration by 11% and decreased bounce rate by almost 19%.

These metrics represent best-case scenarios. Real-world impact will be lower due to mixed page load types, though SXG's benefits remain substantial.

Security & privacy

AMP doesn’t look good

In the case of AMP, Google controls the entire page to be prerendered (including subresources) and has the power to alter it. The user has to trust Google or avoid using AMP entirely.

Another problem with AMP is that the URL of the page points to google.com instead of the visited page. As users interact with AMP pages, they are effectively taught not to verify the URL. This undermines the efforts of the security experts trying to teach the opposite.

I can see one positive side of having google.com in the URL. It’s like Google honestly saying: we control this website and we may alter it if we want.

One solution is to serve AMP pages as SXG, which keeps the publisher's URL in the address bar. Cloudflare even has a switch for that. But I failed to find any example of an AMP website using it.

Speculation Rules prefetching is ok

The Speculation Rules prefetching feature implemented in the Google search results page is based on a so-called private prefetch proxy. The browser prefetches the page the user is predicted to navigate to, and the prefetch requests go through a proxy that protects the user's IP address.

The proxy uses the HTTP CONNECT request method. Assuming the website uses HTTPS and has a valid TLS certificate, Google can only see encrypted data, so no alterations are possible in practice.

While the IP address of the user is protected, the country information still leaks to the website due to the geolocation feature of the private prefetch proxy. This could potentially affect users connecting from countries that the website doesn't typically see traffic from, though the privacy impact is relatively limited.

SXG approach

SXG uses digital signatures to prevent link aggregators (such as Google), including potentially malicious ones, from modifying the data served to users. It also preserves the original URL, which is an important UX, security, and trust-building feature.

From a security standpoint, SXG is a huge improvement over AMP and is marginally better than Speculation Rules prefetching.

Impact on the server load

Organic visits

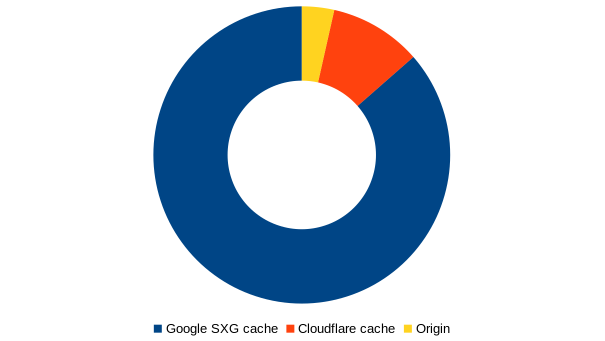

The Google SXG cache acts as a CDN, taking some load off your website.

You may even completely stop seeing Google-referred traffic from browsers supporting SXG in your app logs, especially for popular pages or if you boosted your SXG deployment.

For example, the homepage of my website is mostly handled by Google.

Googlebot traffic

On the other hand, Googlebot traffic increases substantially, and even more so if you boost your SXG deployment.

One reason is the maximum validity period for SXG subresources. Normally, the assets are configured to expire in a year or so, while SXG limits them to 7 days. Googlebot, therefore, has to download them much more often.
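To put that in numbers: the SXG draft caps a signature's validity at 7 days (604,800 seconds), so an asset you would normally cache for a year has to be fetched again roughly once a week. The sketch below only does that arithmetic. The helper names are hypothetical, and it simplifies by assuming exactly one refetch per validity window.

```typescript
// Maximum SXG signature validity per the SXG draft: 7 days, in seconds.
const SXG_MAX_VALIDITY_SECONDS = 7 * 24 * 60 * 60; // 604800

// Hypothetical helper: how long an asset stays valid when served as SXG,
// given the max-age you would normally configure for it.
function effectiveSxgLifetime(maxAgeSeconds: number): number {
  return Math.min(maxAgeSeconds, SXG_MAX_VALIDITY_SECONDS);
}

// Simplified model: one fetch per validity window, rounding up partial windows.
function fetchesPerPeriod(lifetimeSeconds: number, periodSeconds: number): number {
  return Math.ceil(periodSeconds / lifetimeSeconds);
}

const ONE_YEAR = 365 * 24 * 60 * 60;

const normal = fetchesPerPeriod(ONE_YEAR, ONE_YEAR);
const sxg = fetchesPerPeriod(effectiveSxgLifetime(ONE_YEAR), ONE_YEAR);
console.log(`without SXG: ${normal} fetch/year, with SXG: ${sxg} fetches/year`);
```

Under this model, the SXG case comes out at 53 fetches a year (the final partial week rounds up) versus a single fetch without SXG, and that multiplier applies to every subresource.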

AMP

AMP can also reduce the load on your server. However, it only works on mobile devices, so it won’t help you with desktop traffic.

Speculation Rules prefetching

Prefetching using Speculation Rules slightly increases the load on your website, because your infrastructure has to process requests from both actual and potential visitors. There are ways to limit prefetching if it causes issues.
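One way to limit it, sketched below under assumptions, is to recognize prefetch requests by the `Sec-Purpose` header Chrome sends with them (`prefetch`, or `prefetch;anonymous-client-ip` when routed through Google's private prefetch proxy) and treat them differently from real navigations, for example by shedding them when the server is busy. The helper names and the load threshold are hypothetical; check Chrome's documentation for the exact header values before relying on them.

```typescript
// Minimal header accessor; the Fetch API's Headers object satisfies it.
interface HeaderReader {
  get(name: string): string | null;
}

// True when the request is a speculative prefetch rather than a navigation.
// Assumption: Chrome marks such requests with a Sec-Purpose header whose
// first token is "prefetch" (e.g. "prefetch;anonymous-client-ip").
function isPrefetchRequest(headers: HeaderReader): boolean {
  const purpose = headers.get("sec-purpose");
  if (purpose === null) return false;
  return purpose.split(";")[0].trim().toLowerCase() === "prefetch";
}

// Hypothetical policy: always serve real navigations, but shed prefetches
// when the current load (a 0..1 utilization figure) reaches the threshold.
function shouldServeRequest(headers: HeaderReader, currentLoad: number, threshold = 0.8): boolean {
  if (!isPrefetchRequest(headers)) return true;
  return currentLoad < threshold;
}
```

In a Cloudflare worker, `request.headers` can be passed in directly. The sketch assumes that Chrome discards a prefetch answered with an error status such as 503, so a shed prefetch would simply turn into a normal page load; verify that before using it in production.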

SXG disadvantages

Some page loads will be slower

On my website, properly deployed SXG improved overall performance compared to a non-SXG setup. But some users still experience increased LCP. I minimized this issue by boosting my SXG implementation, but I know it’s not possible for every website.

AMP likely has similar trade-offs, though I haven't verified this. Speculation Rules prefetching avoids this issue entirely since it doesn't involve cache and/or custom data formats.

Incomplete SXG implementation hurts performance

Enabling SXG in Cloudflare without proper configuration will likely hurt your page load speeds.

This means you need to set sufficiently long expiration times and configure subresources for prefetching—otherwise, SXG's overhead and interference with Speculation Rules prefetching will make performance worse, not better.
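A minimal sketch of what such a page response could carry, under two assumptions worth checking against Cloudflare's SXG documentation: that the HTML must be publicly cacheable for an SXG to be generated, and that Cloudflare discovers the subresources to prefetch from `Link: rel=preload` headers. `sxgPageHeaders` and the `Subresource` type are hypothetical, and the `max-age` value is yours to choose; the code doesn't know what "sufficiently long" means for your site.

```typescript
// A subresource the page needs for its first render.
interface Subresource {
  url: string; // absolute or root-relative URL of the asset
  as: "image" | "script" | "style" | "font";
}

// Hypothetical helper: headers for an SXG-friendly HTML response.
function sxgPageHeaders(subresources: Subresource[], maxAgeSeconds: number): Record<string, string> {
  const headers: Record<string, string> = {
    // Publicly cacheable, so an edge cache (and SXG generation) can reuse it.
    "Cache-Control": `public, max-age=${maxAgeSeconds}`,
  };
  const link = subresources
    .map((r) => {
      const base = `<${r.url}>; rel=preload; as=${r.as}`;
      // Font preloads are fetched in CORS mode, so they need crossorigin.
      return r.as === "font" ? `${base}; crossorigin` : base;
    })
    .join(", ");
  if (link.length > 0) headers["Link"] = link;
  return headers;
}
```

For example, `sxgPageHeaders([{ url: "/app.css", as: "style" }], 3600)` yields `Cache-Control: public, max-age=3600` and `Link: </app.css>; rel=preload; as=style`.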

If you implement SXG, commit to doing it right. A half-hearted implementation is worse than none at all.

No respect for battery level and connection limitations

Speculation Rules prefetching respects user constraints—it won't trigger when devices are low on battery or in data-saving mode. This strikes a sensible balance between performance and resource conservation.

My tests show that neither SXG nor AMP honors these constraints. This is particularly problematic since both techniques consume more data and CPU resources than HTML-only prefetching.

Implementation challenges

Server-side personalization is not allowed

The primary challenge in implementing SXG is shifting away from server-side personalization.

The page HTML should remain unchanged, regardless of whether the user is a first-time visitor, logged in, or has items in their cart. Updates to the base HTML should be handled client-side, with the frontend requesting data from the backend.
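A minimal sketch of that split, assuming a hypothetical `/api/session` JSON endpoint and a hypothetical `Session` shape: the HTML (and therefore its SXG) is identical for everyone, and the browser fetches the personalized bits after the page loads. The fetch function is injected so the logic can run outside a browser.

```typescript
// Hypothetical per-user data returned by the backend.
interface Session {
  userName: string | null; // null for anonymous visitors
  cartItems: number;
}

// What the page header should display after personalization.
interface HeaderState {
  greeting: string;
  cartBadge: string | null; // null hides the badge
}

type FetchLike = (
  url: string,
  init?: { credentials?: "include" | "same-origin" | "omit" },
) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

// Pure mapping from session data to UI state. The cached HTML always
// renders the anonymous state, which doubles as the fallback.
function toHeaderState(session: Session | null): HeaderState {
  const greeting = session?.userName ? `Hi, ${session.userName}` : "Sign in";
  const cartBadge = session && session.cartItems > 0 ? String(session.cartItems) : null;
  return { greeting, cartBadge };
}

// Loads per-user data after the user-agnostic HTML has rendered.
async function loadHeaderState(fetchFn: FetchLike): Promise<HeaderState> {
  try {
    const res = await fetchFn("/api/session", { credentials: "same-origin" });
    if (!res.ok) return toHeaderState(null);
    return toHeaderState((await res.json()) as Session);
  } catch {
    return toHeaderState(null); // network error: keep the anonymous state
  }
}
```

In the browser, you would call `loadHeaderState(fetch)` and write the result into the relevant DOM elements. A failed request leaves the page in its anonymous, SXG-compatible state instead of breaking it.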

I think setting these constraints is valuable because it pays off when you decide to cache HTML, which, combined with a CDN, can greatly reduce server load.

However, this approach may introduce complexity and other development costs. Also, some applications are simply not compatible with these constraints.

Framework friendliness

Web frameworks have different opinions on where personalization should happen. For example, Ruby on Rails encourages developers to do most of the work on the server, while Next.js embraces the client side.

As someone who prefers Ruby over JavaScript, I understand the Rails approach of maximizing the Ruby part of the app (the server) while minimizing JavaScript (the client). In contrast, Next.js uses the same language on both sides of the network connection and relies heavily on React, a frontend-focused library.

If you read the first two parts of this series, you may have noticed that forcing Rails to generate SXG-compatible HTML was a lot of work, while Next.js required only cosmetic changes. On the other hand, Rails has SXG-friendly subresources by default, while adjusting them in Next.js was a real pain that required Cloudflare workers.

Insufficient documentation

As I write this post, there is no Wikipedia article on SXG. If you search for SXG documentation, you won’t find many practical, developer-focused resources.

There is a standard draft, a few valuable articles on web.dev, the Chrome, Google, and Cloudflare blogs and knowledge bases, and technical documentation scattered across SXG-related GitHub repositories. Many of the remaining results are low-quality, generic blog posts written primarily for SEO purposes.

No one talks about the real-world issues with subresources that have a major impact on the overall effectiveness of SXG.

I found several instances of outdated[1] or downright incorrect information.

This series was created to address the above issues.

Quirks and bugs

One of my posts focuses solely on debugging SXG, while three others are simply detailed bug reports with workarounds. In summary, 40% of this SXG guide deals with bugs and quirks.

The good news is that most of these issues aren't fundamental to SXG technology itself and could theoretically be addressed by Cloudflare, though we remain dependent on Cloudflare's willingness to implement such fixes.

It's also possible to address most of these challenges by creating a specialized Cloudflare worker that would transform all website traffic, eliminating SXG issues. The code snippets for fixing particular problems are already available in my posts—they just need to be combined into a single, comprehensive, fire-and-forget solution, a super-fix worker.

Maintenance and monitoring

SXG is easy to break; therefore, it needs constant monitoring and maintenance. Here is the additional work you should do:

Create and maintain automated tests to cover basic problems like setting cookies or other prohibited headers, so that SXG won’t break when you add new features to your app.

Monitor whether critical production pages serve SXG at all (I created sxg-checker for that).

Monitor the rate of performance degradation events in production, such as failed subresource prefetching, fallback client-side redirects, and on-demand SXG loads. The page-load-type library can help detect these cases, and you can report them to the Application Performance Monitoring (APM) solution of your choice.
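The first two items can start small. The sketch below is not how sxg-checker works; it's a minimal, assumption-laden starting point. `findSxgBlockers` flags response headers that prevent SXG: its forbidden list is a partial selection of the stateful headers the SXG draft excludes, and treating `private` or `no-store` as blockers is an assumption about Cloudflare's behavior. `checkSxg` requests a page with an `Accept` header that prefers SXG and checks whether the response came back as `application/signed-exchange;v=b3`, assuming Cloudflare picks the format based on that header. Both helpers are hypothetical, and a passing check only shows that an SXG was served, not that it is valid or cached by Google.

```typescript
// Partial list of stateful headers excluded by the SXG draft (not exhaustive).
const FORBIDDEN_HEADERS = ["set-cookie", "set-cookie2", "clear-site-data", "www-authenticate", "authentication-info"];

// Cache-Control directives assumed to stop Cloudflare from generating SXG.
const BLOCKING_DIRECTIVES = ["private", "no-store"];

// Returns human-readable problems; an empty array means no known blockers.
function findSxgBlockers(headers: Record<string, string>): string[] {
  const lower = new Map(Object.entries(headers).map(([k, v]) => [k.toLowerCase(), v] as const));
  const problems: string[] = [];
  for (const name of FORBIDDEN_HEADERS) {
    if (lower.has(name)) problems.push(`forbidden header: ${name}`);
  }
  // Directive names only, e.g. "max-age=600" -> "max-age".
  const directives = (lower.get("cache-control") ?? "")
    .split(",")
    .map((d) => d.trim().toLowerCase().split("=")[0]);
  for (const d of BLOCKING_DIRECTIVES) {
    if (directives.includes(d)) problems.push(`cache-control: ${d}`);
  }
  return problems;
}

// Minimal response shape; the Fetch API's Response satisfies it.
interface ResponseLike {
  status: number;
  headers: { get(name: string): string | null };
}
type SxgFetch = (url: string, init: { headers: Record<string, string> }) => Promise<ResponseLike>;

// True when the Content-Type denotes a signed exchange, version b3.
function isSxgContentType(contentType: string | null): boolean {
  if (contentType === null) return false;
  const [mime, ...params] = contentType.split(";").map((p) => p.trim().toLowerCase());
  return mime === "application/signed-exchange" && params.includes("v=b3");
}

// Explicitly asks for SXG and reports whether one came back.
async function checkSxg(url: string, fetchFn: SxgFetch): Promise<{ ok: boolean; detail: string }> {
  const res = await fetchFn(url, {
    headers: { Accept: "application/signed-exchange;v=b3,*/*;q=0.8" },
  });
  if (res.status !== 200) return { ok: false, detail: `HTTP ${res.status}` };
  const contentType = res.headers.get("content-type");
  if (!isSxgContentType(contentType)) {
    return { ok: false, detail: `unexpected content type: ${contentType ?? "none"}` };
  }
  return { ok: true, detail: "SXG served" };
}
```

In a test suite, you could run `findSxgBlockers` against the headers of each important route; in a scheduled job, you could call `checkSxg(url, fetch)` for critical pages and alert when `ok` is false.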

Not being new

Tech often chases the newest trends. SXG has real advantages and solves current problems, but it has been around for a while now. Newer alternatives get more attention, which likely contributes to SXG's limited adoption.

Risks of implementing SXG

Stagnation

Both the technology and the ecosystem around it are stagnant:

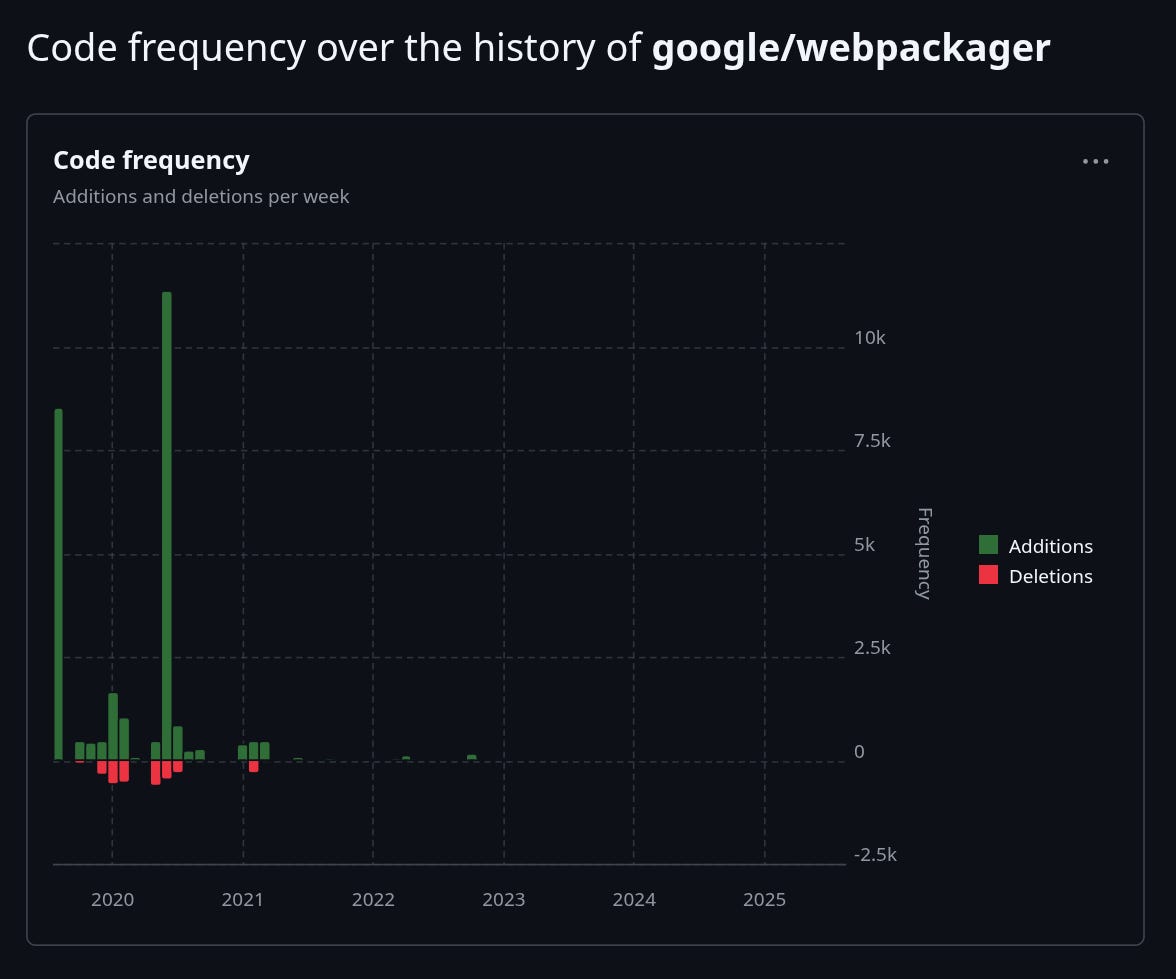

The SXG standard draft looks like it is stuck.

Lack of development: the open source projects seem abandoned. When it comes to Google's repositories, commits stopped at the end of 2022 as if the project was killed.

No new content on blogs and tech sites.

Forget about getting your SXG questions answered on Cloudflare or Google forums.

Poor adoption

Only Google and Cloudflare embraced SXG.

Websites supporting SXG are rare. Of those I could find, none supported subresources properly, so they would be better off disabling SXG and using Speculation Rules prefetching instead.

Google is currently the only search engine that prefetches content using SXG. This differs significantly from AMP's adoption pattern across the industry.

When Google announced AMP support, Microsoft immediately followed suit the same year and eventually built its own AMP cache and viewer within two years[2].

However, despite many years passing since Google introduced SXG, Bing has shown no interest in implementing this technology, leaving Google as the sole adopter among major search engines.

This creates a chicken-and-egg problem: Link aggregators don't use SXG because websites don't implement it, and websites don't implement it because link aggregators (except Google) don't use it.

Stagnation and poor adoption may lead to abandonment. I believe there is a non-zero risk of Google sunsetting SXG support. The fact that a 2-week global outage in 2024 went unnoticed by anyone except me—and wasn't addressed in Google's official communications—may increase the likelihood of future discontinuation.

Artificial Intelligence

As more people obtain information directly from large language models without visiting websites, prefetching becomes less critical. Meanwhile, Google—currently the only search engine supporting SXG—faces mounting pressure from competitors developing solutions that could make the traditional “10 blue links” search model obsolete.

Technically, I can imagine the chatbot interface prefetching links to sources using SXG. However, considering the adoption rate, it’s unlikely.

Reliance on Cloudflare

I haven't tried to self-host an SXG-enabled website. Given that the last commit to the SXG nginx module was at the end of 2021, I foresee issues with using it with recent nginx versions.

Even if the module can be compiled and loaded by nginx, I'm sure there will be bugs affecting both functionality (like the issues I discovered in the Cloudflare implementation) and security.

Since the code appears abandoned, I would avoid running it in production. The other option, Web Packager Server, is also unmaintained.

This makes Cloudflare the only option. In other words, depending on SXG makes you dependent on this company: classic vendor lock-in.

Having to trust Big Tech

Remember when I praised SXG for not allowing Google to alter the content because it's signed by the publisher? That's good, but there's another problem: Cloudflare holds the SXG signing keys, and if it chooses to, it can alter your website.

This illustrates the broader issue with Cloudflare. It provides amazing features, but requires you to proxy traffic through its servers. With access to plain text requests and responses, Cloudflare can do anything with them.

You can self-host your AMP pages, but you have to rely on Google to cache them, with the risk that Google might alter them. You don't need to worry about Google altering your SXG content, but you need to trust Cloudflare to generate it properly. In both cases, you must trust a big tech company.

Verdict

It works for me

If you ask me whether it was worth implementing SXG in my specific case, I would answer: yes. Here is why:

Most of my traffic comes from Google; therefore, making a great first impression on my new users pays off.

I implemented SXG before Google deployed Speculation Rules prefetching on the desktop; therefore, my website’s performance improved significantly compared to its previous state.

The improvements on the desktop were probably even greater because SXG masks the Cloudflare TTFB issue.

SXG was the pretext for implementing edge caching and, at the same time, made it easier. Edge caching improved overall website speed, not only for Google-referred visits, and it also decreased server load.

Even if Google shuts down SXG prefetching or Chrome stops supporting it in the future, I already gained a lot in terms of user engagement and conversions due to being the fastest website in its category.

While it took a lot of work (mostly debugging), SXG worked well for my specific use case.

In addition, I learned a lot during the process, such as HTTP caching details, Cloudflare workers implementation, and various frontend tricks. At the same time, I sharpened my skills regarding analytics, website performance measurement, and black-box testing.

Will it work for you?

However, should I recommend it to you if most of your traffic comes from Google? It depends, as you have to balance all the pros and cons I described in this article.

If, in your specific situation, there are more drawbacks, then stick to Speculation Rules prefetching; it just works.

Otherwise, if you want to see how low your LCP can be, follow my SXG guide. I hope it helps you avoid my mistakes and significantly speed up your SXG implementation.

The changes I’d like to see

Some things could improve the current status quo. Here is my wishlist:

I'm not sure if it's technically feasible, but if Google could use Speculation Rules to prefetch SXG with a fallback to standard prefetching, we could have the best of both worlds. Wherever SXG would cause problems, standard prefetching would take over, eliminating SXG's negative side effects while keeping significant performance improvements. And since Speculation Rules respect battery and data-saving constraints, users concerned about battery drain and data usage would be better off on both counts!

I'd love to see broader adoption across platforms: search engines beyond Google, LLM chatbots, social platforms like Reddit, and tech sites like Hacker News. WordPress supporting SXG out of the box would be particularly impactful given its massive market share.

If Cloudflare improved its SXG implementation to be more reliable, this guide would be much shorter.

Finally, I'd like to see a well-maintained, open-source self-hosting solution to enable smaller hosting companies and individual developers to implement SXG without relying on Cloudflare.

Congrats and thanks!

You made it to the end of this post, and if you read the previous posts too, you've finished the entire SXG series. I hope you liked it, and I'd like to thank you for your time!

If you think this post might be valuable to someone else, please share it.

I don't plan to write more about SXG, but if you're into my other interests, subscribe below.

[2] Even though Microsoft has embraced AMP, I was unable to find any AMP-enabled site that was prefetched or even loaded on demand when using Bing search on my mobile device.